SUSTAINABLE AI & CRYPTO MINING HARDWARE

DESIGNED IN JAPAN

Lenzo builds ultra-efficient hardware designed for modern parallel workloads — from blockchain to AI inference. Our proprietary CGLA architecture delivers breakthrough performance per watt in a compact, cost-effective form.

COMPUTE ENGINES FOR THE AI & CRYPTO FRONTIER

KEY BENEFITS

ABOUT

Crafted in Japan, Lenzo is built by the engineers behind some of the world’s fastest chips—from Sony’s PlayStation CPU/GPU teams to the designers of Fujitsu’s supercomputers. Our founding team includes researchers from NAIST and ITRI Taiwan, with deep expertise in large-scale compute infrastructure. We are system architects and builders, committed to engineering high-performance, energy-efficient hardware for a new generation of workloads.

CAREERS

TECHNICAL RESOURCES

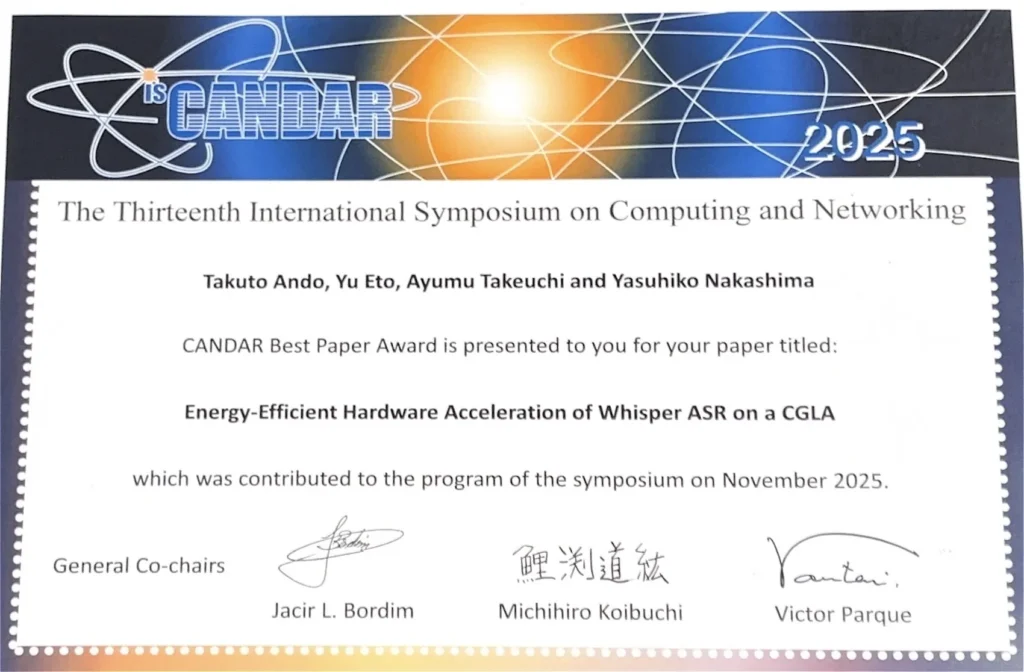

"Energy-Efficient Hardware Acceleration of Whisper ASR on a CGLA" has been selected for the CANDAR Best Paper Award

We are pleased to announce that "Energy-Efficient Hardware Acceleration of Whisper ASR on a CGLA", a paper co-authored by LENZO's Takuto Ando that explores LENZO's core technology, has been selected for the Best Paper Award at the Thirteenth International Symposium on Computing and Networking (CANDAR 2025), held in Yamagata from November 25–28, 2025.

CANDAR is a leading international forum covering advanced computing and networking research, spanning theoretical foundations, parallel and distributed systems, hardware architectures, and practical applications. Among numerous submissions, Ando’s work was recognized for its originality, technical contribution, and impact.


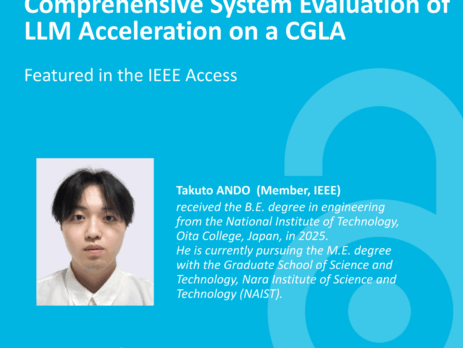

Our CGLA-Based LLM Inference Research Accepted to IEEE Access

We are pleased to share that our collaborative research with NAIST, “Efficient Kernel Mapping and Comprehensive System Evaluation of LLM Acceleration on a CGLA”, has been formally accepted to the international journal IEEE Access. The full article is available through the DOI listed under Publication Details below.

This work represents the first end-to-end evaluation of Large Language Model (LLM) inference on a non-AI-specialized Coarse-Grained Linear Array (CGLA) accelerator, using the state-of-the-art Qwen3 model family as the benchmark. It reinforces the viability of general-purpose CGLA architectures, not just fixed-function ASICs or high-power GPUs, for next-generation LLM inference, and demonstrates that compute efficiency, programmability, and adaptability to changing algorithms can coexist in a reconfigurable architecture.

For LENZO, this is a meaningful milestone in advancing the underlying theory and validation behind our CGLA-based compute vision.

Publication Details

Title: Efficient Kernel Mapping and Comprehensive System Evaluation of LLM Acceleration on a CGLA

Journal: IEEE Access

DOI: 10.1109/ACCESS.2025.3636266

LENZO to Exhibit at the Japanese Job Expo — Now Hiring SoC Engineers & C/C++ Developers

LENZO is excited to announce that we will be exhibiting at the Japanese Job Expo hosted by ChallengeRocket — a global event connecting leading technology companies with top engineering talent across Europe.

As we continue building next-generation AI and blockchain semiconductor technology designed in Japan, we are expanding our engineering team across Poland, Ukraine, and remote positions worldwide.

This event is an opportunity for talented engineers to meet the LENZO team, learn about our work, and explore open roles in our rapidly growing company.

Open Positions

System on Chip (SoC) Engineer

C/C++ Programmer (Blockchain / Mining Software)

Why Join LENZO?

At LENZO, we’re building CGLA-based compute engines for the next era of AI inference and high-efficiency crypto mining — redefining how circuits are designed, optimized, and deployed. Our engineering culture emphasizes:

- Cutting-edge semiconductor R&D

- Hands-on collaboration between hardware, firmware, and algorithm teams

- Speed, autonomy, and global teamwork

- A chance to shape the world’s next major compute architecture

Meet Us at the Japanese Job Expo

If you’re an engineer passionate about advanced compute hardware, mining systems, or low-level software, we’d love to meet you.

Event: Japanese Job Expo

Event Link: https://challengerocket.com/japanese-job-expo

Come learn more about our work and explore opportunities to join the team behind Japan’s next-generation AI & crypto semiconductor.

LLMs Running on CGLA

In this technical blog, Yoshifumi Munakata outlines recent progress made by LENZO's LLM Team in getting LLMs running on CGLA.

The rise of the token-driven digital economy demands a new class of compute architecture—one capable of delivering high performance, ultra-low power consumption, and seamless scalability. At LENZO, we are developing exactly that with CGLA (Coarse-Grained Linear Array), our next-generation compute engine designed to accelerate the AI workloads that power modern applications.

Among the most important of these applications are Transformer-based large language models (LLMs) such as ChatGPT, Gemini, and Llama. These models define today’s AI landscape—and we are proud to share that CGLA now runs full Transformer-based inference.

Running Llama on CGLA

The LENZO LLM Team has successfully brought Llama—one of the world’s most widely adopted open-source LLM families—to run natively on our CGLA architecture.

This achievement demonstrates:

- CGLA’s compatibility with mainstream Transformer architectures

- CGLA’s ability to execute real-world, high-demand inference workloads

- A clear path toward accelerating any Llama.cpp-based model, including Llama, Qwen, DeepSeek, Gemma, and others

Whether users choose a small quantized model or a larger configuration, CGLA handles the full inference pipeline, from prompt processing to token generation.

API Access: OpenAI-Compatible Interface

To make CGLA easy for developers to use, we integrated CGLA with the server functionality of the Llama.cpp ecosystem and exposed it through an OpenAI-compatible API interface.

This means:

You can call CGLA inference using the standard OpenAI Python client.

Developers can run CGLA inference from:

- Python applications

- Web apps

- Terminal scripts

- Custom services using the OpenAI API schema

- Any tool expecting an OpenAI-style completion/chat endpoint

The experience mirrors existing LLM workflows, but the underlying computation runs entirely on CGLA.

Example: Querying Qwen 1.5B on CGLA through the API

From a browser or Python script, a user sends:

Prompt: “What is a CPU?”

Model: Qwen 1.5B (quantized)

CGLA processes the input, runs inference using the model, and returns the generated response—just like any cloud LLM, but powered by our custom hardware.

Users can freely choose any Llama.cpp-compatible model, including Llama, Gemma, DeepSeek, and others.
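As a rough sketch of what such a request looks like, the snippet below builds and sends an OpenAI-style chat completion call using only the Python standard library. The base URL, port, and model identifier are placeholders assumed for illustration, not LENZO's actual endpoint or model names; the `/v1/chat/completions` path is the one served by the llama.cpp server's OpenAI-compatible interface.

```python
import json
import urllib.request

# Hypothetical values: replace with the address of your CGLA-backed server
# and the model name it reports. Neither comes from LENZO's documentation.
BASE_URL = "http://localhost:8080/v1"
MODEL = "qwen-1.5b-q4"


def build_chat_request(
    prompt: str, model: str = MODEL, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    # OpenAI-style responses place the reply under choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

With a server running at the assumed address, `ask("What is a CPU?")` would return the generated text. The same endpoint can also be reached from the OpenAI Python client by pointing its `base_url` at the server.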

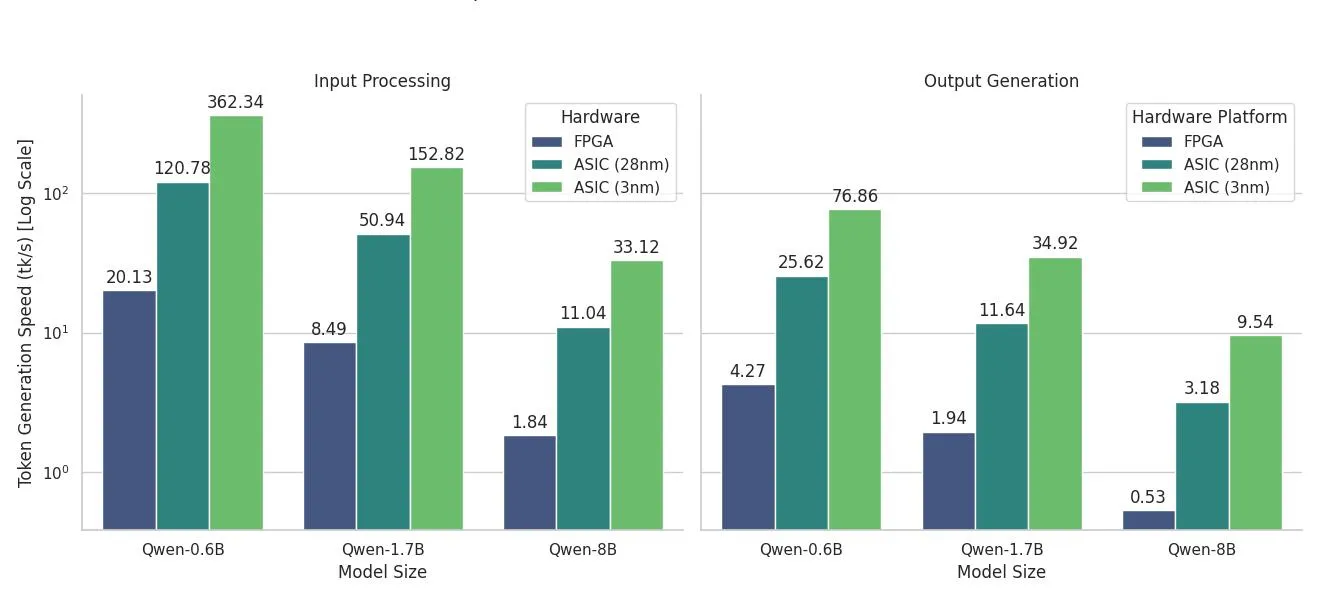

Performance of Transformer Inference on CGLA

Inference performance on accelerators is typically measured in:

- Input Processing Speed

- Token Generation Speed

Across multiple quantized models, CGLA demonstrates strong generation performance, with projected gains as we advance toward our 28 nm and 3 nm ASIC implementations. Where CGLA truly stands out is power efficiency. Compared with an RTX 4090 GPGPU, CGLA delivers:

- Up to 44.4× improvement in PDP (power-delay product)

- Up to 11.5× improvement in EDP (energy-delay product)

These efficiency gains directly translate to lower operating costs and more sustainable large-scale deployments.
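To make the two metrics concrete, here is a small sketch assuming the usual definitions: PDP (power-delay product) is average power multiplied by execution time, which equals the energy consumed, and EDP (energy-delay product) multiplies that energy by execution time once more. The device figures below are invented solely to show the arithmetic and are not LENZO measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    """One inference run: average power draw and wall-clock time."""
    power_watts: float
    delay_seconds: float

    @property
    def pdp(self) -> float:
        # Power-delay product: watts x seconds = joules (energy per run).
        return self.power_watts * self.delay_seconds

    @property
    def edp(self) -> float:
        # Energy-delay product: joules x seconds; delay is counted twice.
        return self.pdp * self.delay_seconds


def improvement(baseline: float, candidate: float) -> float:
    """How many times smaller the candidate's metric is than the baseline's."""
    return baseline / candidate


# Made-up example numbers, chosen only for easy arithmetic.
gpu = Run(power_watts=100.0, delay_seconds=1.0)
accelerator = Run(power_watts=10.0, delay_seconds=2.0)

pdp_gain = improvement(gpu.pdp, accelerator.pdp)  # 100 J / 20 J
edp_gain = improvement(gpu.edp, accelerator.edp)  # 100 J*s / 40 J*s
print(f"PDP improvement: {pdp_gain:.1f}x, EDP improvement: {edp_gain:.1f}x")
# prints "PDP improvement: 5.0x, EDP improvement: 2.5x"
```

In this toy case the low-power device is also the slower one, so its EDP gain (2.5×) is smaller than its PDP gain (5×): EDP weights latency more heavily than PDP does.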

The Road Ahead

With Llama, Qwen, and other open-source LLMs already running on CGLA and accessible through an OpenAI-compatible API, we are now focused on two goals:

1. Becoming a Hugging Face Inference Provider

Allowing anyone worldwide to run Transformer inference on CGLA instantly through the platform.

2. Achieving even higher speed and lower power consumption for CGLA-based LLM inference

Through continued architectural refinements and ASIC development.

We appreciate your support as LENZO builds the future of compute—one that is open, efficient, and optimized for the AI systems shaping the coming decade.

Thank you for following the work of the LENZO LLM Team!

INVESTORS

Investing in the Future of Intelligent Compute

As the rapid expansion of generative AI accelerates competition in algorithms, a new frontier is emerging in the optimization of computational circuits and energy efficiency. We hold great expectations that Japan-based LENZO will drive a game-changing breakthrough with its innovative semiconductors and establish a strong presence on the global stage.

LENZO’s core technology, CGLA, is an advanced innovation with the potential to address one of the most pressing challenges in cryptocurrency mining—energy consumption. Just as the PlayStation once transformed the gaming industry with its proprietary semiconductors, we have great expectations that Japan-born LENZO will revolutionize the computational foundation of blockchain and evolve into a world-leading AI semiconductor company.

Serial entrepreneur Kenshin Fujiwara has chosen his next challenge in the rapidly expanding global AI and crypto markets. Building on an architecture developed at Nara Institute of Science and Technology (NAIST), LENZO is developing semiconductor chips tailored for AI and crypto applications. Its vertically integrated business model, which combines Japan’s traditional strengths in hardware and software, is truly unique and holds strong potential to compete on the global stage.

Ready to power the next era of intelligent infrastructure?